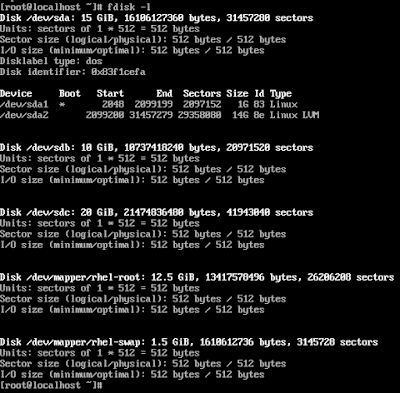

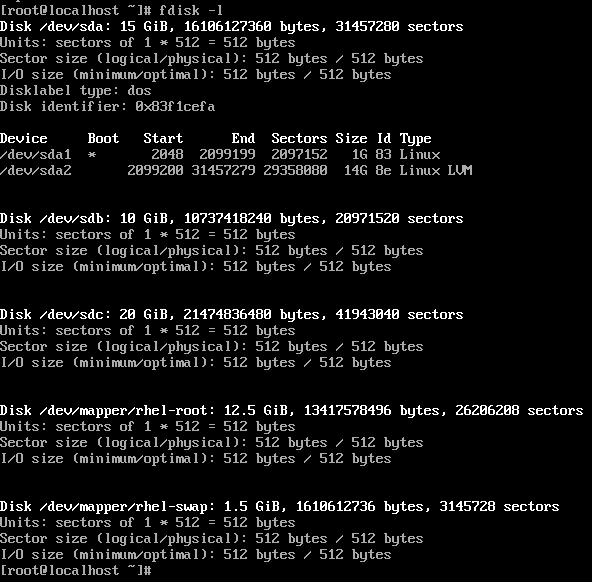

The total disks available on the node are displayed first. LVM is set up on sdb and sdc.

Step 1: Create a Physical Volume (PV) on each of sdb and sdc.

Step 2: Create a Volume Group (VG) from the PVs created in Step 1.

Step 3: Create a Logical Volume (LV) from the Volume Group.

Step 4: Format the LV so that it can be used.

Step 5: This is the Hadoop cluster report before the LV is mounted.

Step 6: Mount the LV so that it can be used.

Step 7: The cluster report now shows an increase in storage, which comes from the LV.

Step 8: Extend the LV without any interruption to the cluster.

Step 9: The cluster report again shows an increase in storage size.
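
The steps above can be sketched as a command plan. This is a minimal sketch, not the exact commands used in the screenshots: the volume group name `hadoop_vg`, the logical volume name `hadoop_lv`, the sizes, the mount point `/dn`, and the ext4 filesystem are all assumptions made for illustration, and the mount point is assumed to be the DataNode's storage directory. The script only prints the commands unless `execute=True` is passed, because `pvcreate` and `mkfs.ext4` destroy existing data on the target devices.

```python
import shlex
import subprocess


def lvm_plan(disks, vg, lv, size, mount_point, extend_by):
    """Return the LVM workflow as a list of argv lists. Nothing is executed here."""
    lv_path = f"/dev/{vg}/{lv}"
    report = ["hdfs", "dfsadmin", "-report"]  # Hadoop cluster storage report
    return [
        ["lsblk"],                                          # list the disks on the node
        ["pvcreate", *disks],                               # Step 1: physical volumes
        ["vgcreate", vg, *disks],                           # Step 2: volume group
        ["lvcreate", "--size", size, "--name", lv, vg],     # Step 3: logical volume
        ["mkfs.ext4", lv_path],                             # Step 4: format (ext4 assumed)
        report,                                             # Step 5: report before mounting
        ["mount", lv_path, mount_point],                    # Step 6: mount the LV
        report,                                             # Step 7: report after mounting
        ["lvextend", "--size", f"+{extend_by}", lv_path],   # Step 8: grow the LV online
        ["resize2fs", lv_path],                             # Step 8: grow the ext4 filesystem
        report,                                             # Step 9: report after extending
    ]


def run(plan, execute=False):
    """Print each command; run it only when execute=True (requires root)."""
    for argv in plan:
        print(shlex.join(argv))
        if execute:
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Hypothetical names and sizes; adjust them to the actual node before executing.
    plan = lvm_plan(
        disks=["/dev/sdb", "/dev/sdc"],
        vg="hadoop_vg",
        lv="hadoop_lv",
        size="10G",
        mount_point="/dn",
        extend_by="5G",
    )
    run(plan, execute=False)  # dry run: prints the commands only
```

Note that `lvextend` only grows the logical volume. The filesystem on it also has to be grown (here with `resize2fs`, which works on a mounted ext4 filesystem) before the extra space shows up in the cluster report.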